Why does Gaussian Splatting fail?

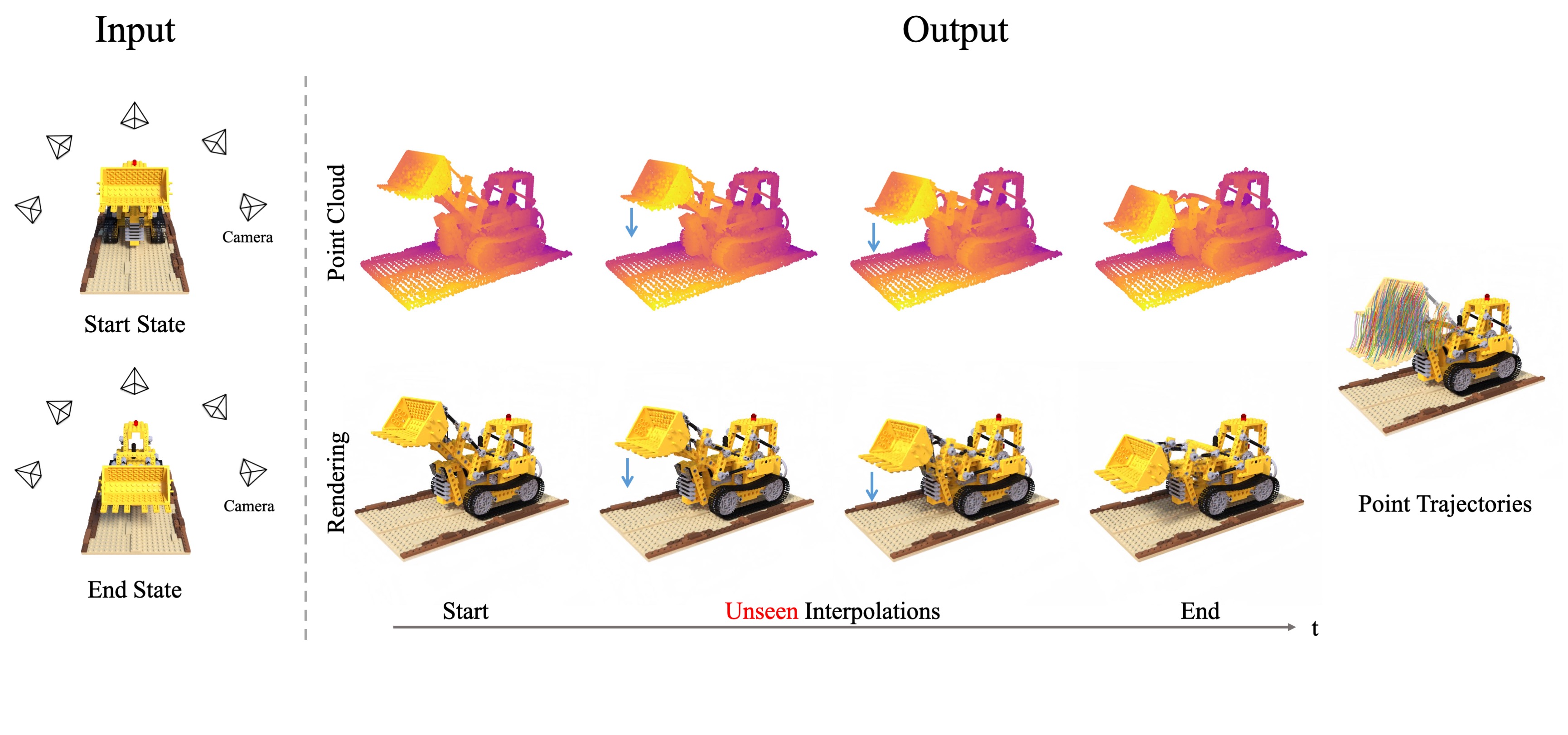

As shown in the results above, methods built on Gaussian Splatting have difficulty generating smooth point-level scene interpolations. This is due to the following issues:

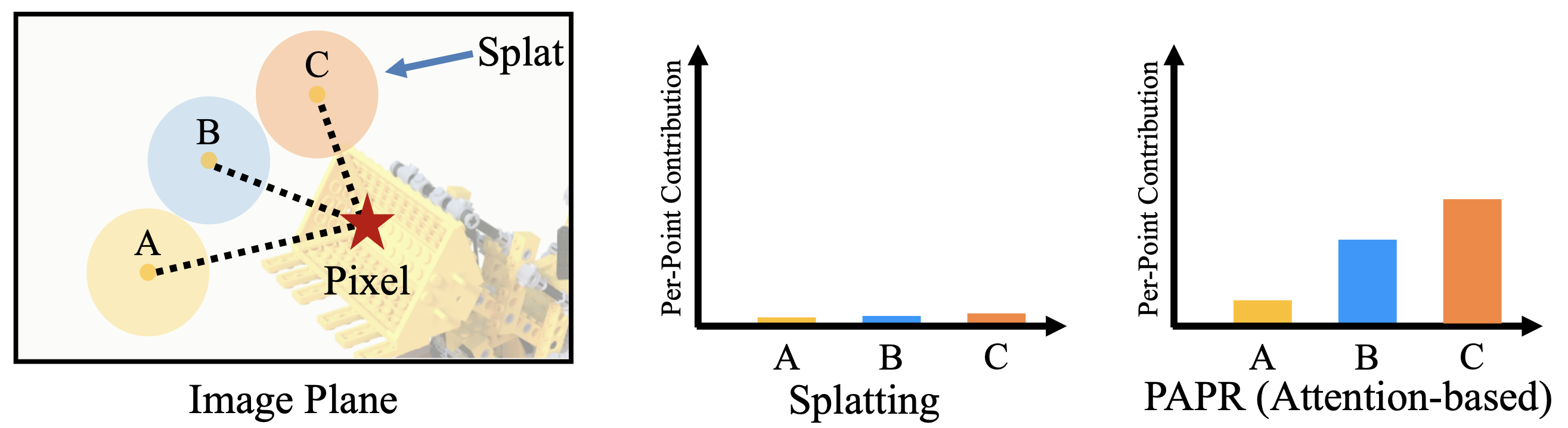

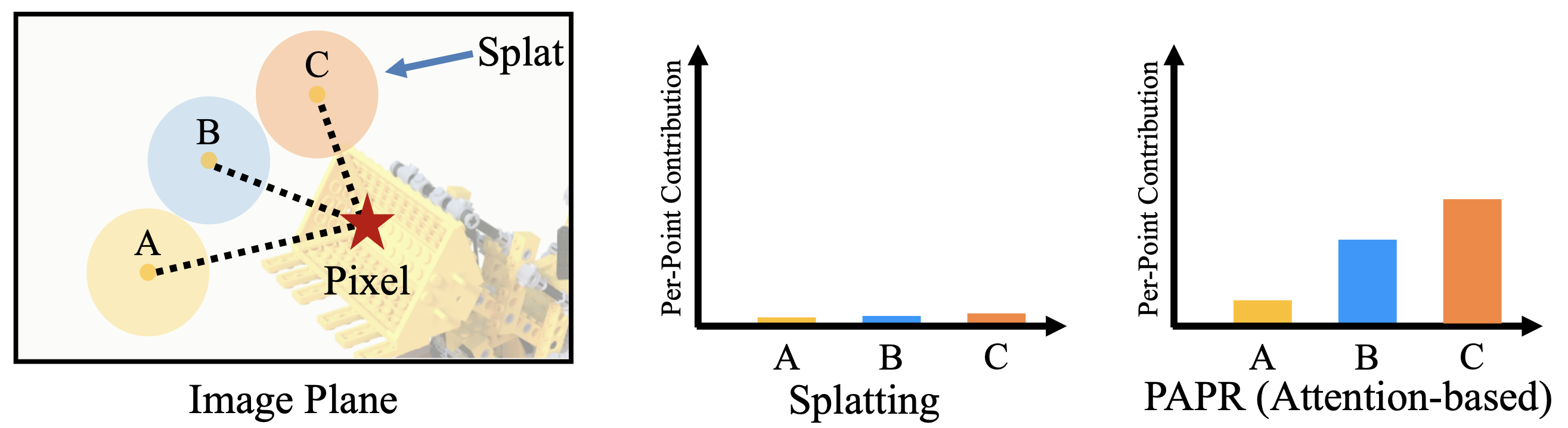

Vanishing Gradient

Consider a pixel with high loss that’s not covered by any splat. Splatting-based methods fail to move splats to cover it because of vanishing gradients: a splat's contribution to a pixel, and with it the gradient, decays to nearly zero far from the splat's centre. PAPR avoids this issue by using an attention mechanism whose attention weights always sum to one, so some points always contribute to every pixel. This makes it more suitable for learning large scene changes.
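The contrast can be illustrated with a small 1D toy, given here as a rough sketch rather than either method's actual renderer. It assumes additive Gaussian splats with a fixed `sigma`, and a softmax over negative distances as a stand-in for attention; PAPR's real attention uses learned embeddings, and all function names below are hypothetical. Gradients are estimated by central finite differences to keep the example dependency-free.

```python
import numpy as np

def splat_render(x, mu, color, sigma=0.5):
    # Toy additive splatting: each splat contributes an unnormalised Gaussian weight.
    g = np.exp(-0.5 * ((x - mu) / sigma) ** 2)
    return np.sum(g * color), g

def attention_render(x, mu, color):
    # Toy attention: softmax over negative distance, so the weights sum to one.
    logits = -np.abs(x - mu)
    w = np.exp(logits - logits.max())
    w = w / w.sum()
    return np.sum(w * color), w

def position_grad(render, x, mu, color, target, eps=1e-5):
    # Central finite-difference gradient of the squared error w.r.t. point positions.
    grads = np.zeros_like(mu)
    for i in range(len(mu)):
        hi, lo = mu.copy(), mu.copy()
        hi[i] += eps
        lo[i] -= eps
        loss_hi = (render(x, hi, color)[0] - target) ** 2
        loss_lo = (render(x, lo, color)[0] - target) ** 2
        grads[i] = (loss_hi - loss_lo) / (2 * eps)
    return grads

pixel = 0.0                      # pixel location with high loss
points = np.array([10.0, 11.0])  # both points are far from the pixel
colors = np.array([0.2, 0.8])
target = 1.0

_, splat_w = splat_render(pixel, points, colors)
_, attn_w = attention_render(pixel, points, colors)
splat_grad = position_grad(splat_render, pixel, points, colors, target)
attn_grad = position_grad(attention_render, pixel, points, colors, target)

print("total splat weight:    ", splat_w.sum())
print("total attention weight:", attn_w.sum())
print("splat position grad:    ", splat_grad)
print("attention position grad:", attn_grad)
```

In this toy, the total splat weight at the pixel is negligible, so the loss gives the splat positions essentially no signal, while the normalised attention weights keep both points in play. The distance-based logits are only one possible choice; a very sharp softmax can saturate as well.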

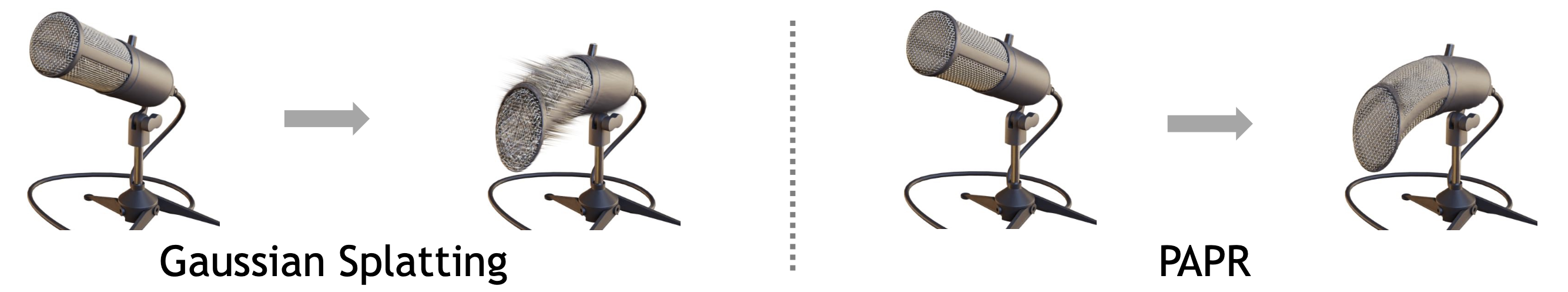

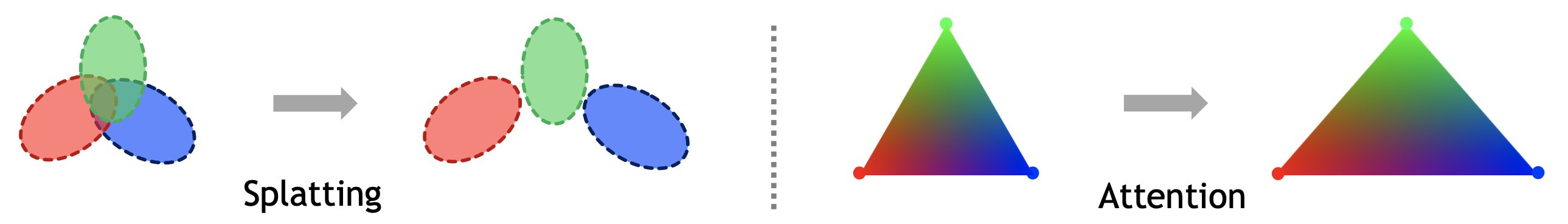

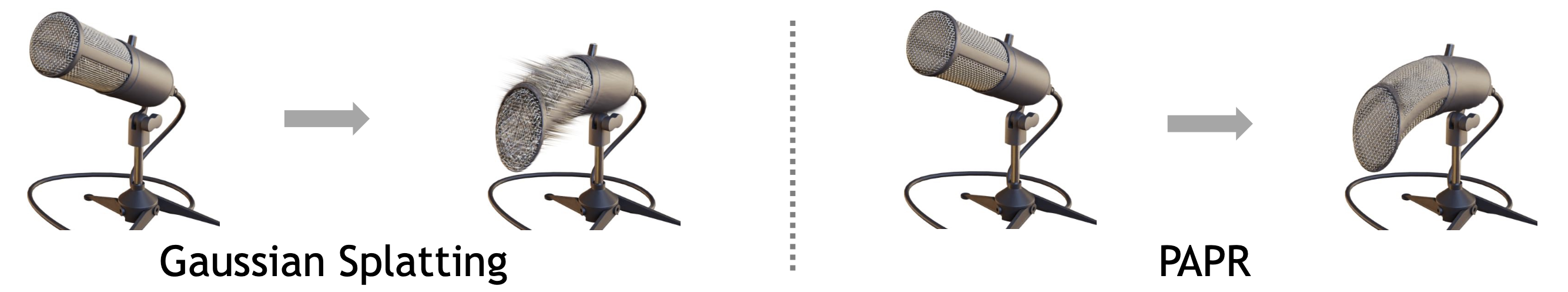

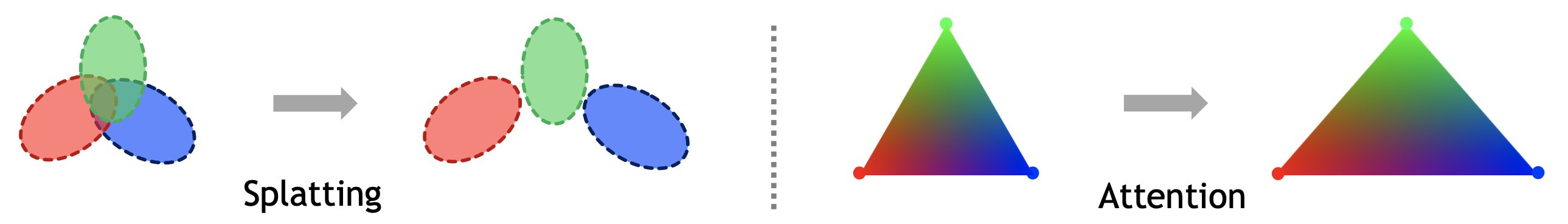

Rendering Breaks Under Non-rigid Transformation

Gaussian splats may no longer be Gaussian after non-rigid transformations, which introduces gaps in rendering. In contrast, attention-based methods like PAPR render by interpolating nearby points and naturally avoid gaps.

Compared to Gaussian Splatting, PAPR better preserves rendering quality after non-rigid transformations, which allows it to render non-rigid scene changes during the interpolation process.
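The gap effect can be sketched in 1D under strong simplifying assumptions: splat centres are moved by a hypothetical non-rigid `warp` while each splat keeps its original fixed-width Gaussian shape, and attention is again approximated by a softmax over negative distances. None of these names or choices come from PAPR or Gaussian Splatting themselves.

```python
import numpy as np

def warp(p):
    # Hypothetical non-rigid deformation: the stretch grows with distance from 0.
    return p * (1.0 + 0.5 * p)

def splat_coverage(xs, centers, sigma=0.5):
    # Sum of fixed-width Gaussian weights at each query location.
    d = xs[:, None] - centers[None, :]
    return np.exp(-0.5 * (d / sigma) ** 2).sum(axis=1)

def attention_weights(xs, centers):
    # Softmax over negative distance: each row of weights sums to one.
    logits = -np.abs(xs[:, None] - centers[None, :])
    w = np.exp(logits - logits.max(axis=1, keepdims=True))
    return w / w.sum(axis=1, keepdims=True)

centers = np.arange(5, dtype=float)  # evenly spaced points before deformation
warped = warp(centers)               # unevenly spaced points after deformation

xs_before = np.linspace(centers[0], centers[-1], 200)
xs_after = np.linspace(warped[0], warped[-1], 200)

cov_before = splat_coverage(xs_before, centers)
cov_after = splat_coverage(xs_after, warped)
attn_after = attention_weights(xs_after, warped).sum(axis=1)

print("min splat coverage before warp:", cov_before.min())
print("min splat coverage after warp: ", cov_after.min())
print("min attention weight sum after warp:", attn_after.min())
```

Before the warp the fixed-width splats overlap everywhere; after it, the stretched regions fall between splats and coverage drops to almost nothing, which is the 1D analogue of a rendering gap. The normalised interpolation weights still sum to one at every query location.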